GenAI Azure OpenAI Inference API - Getting Started

Overview

Welcome to the GenAI Azure OpenAI Inference API documentation. This API provides Generative AI inference capabilities, namely embeddings and chat completions, through TrustNest's integration with Azure OpenAI. This document explains how the API works, how to interact with it, and how to execute your first API call using the Developer Portal.

The GenAI Azure OpenAI Inference API is protected by MFA and requires a subscription key.

Requirements

- A valid subscription key for the GenAI Azure OpenAI Inference API (see Get a subscription key for Beta API Product).

If you are using a thalesgroup.com identity, please have a look at the two troubleshooting documents:

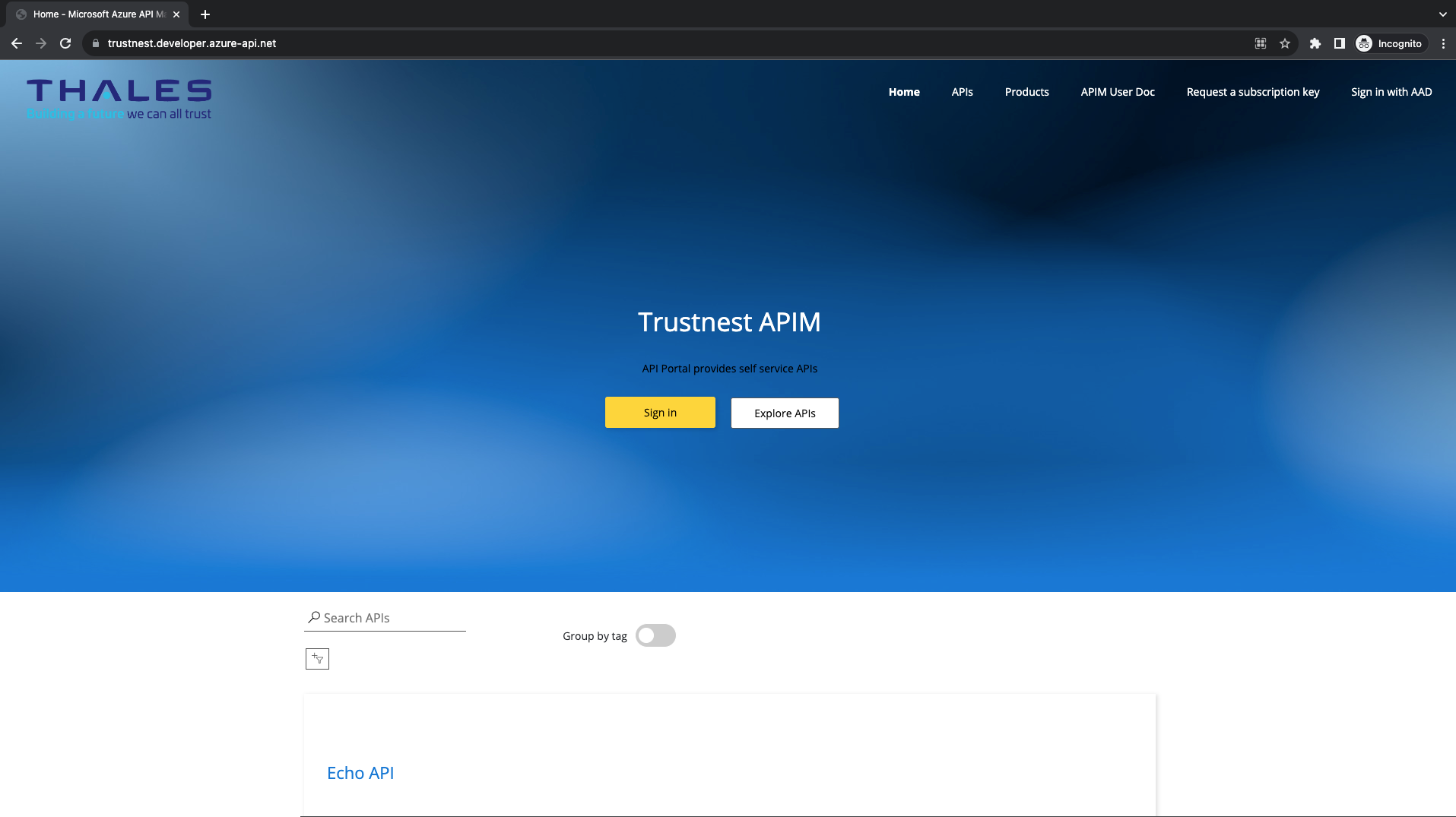

Accessing TrustNest APIM

First, open your browser and go to https://trustnest.developer.azure-api.net/

You should see:

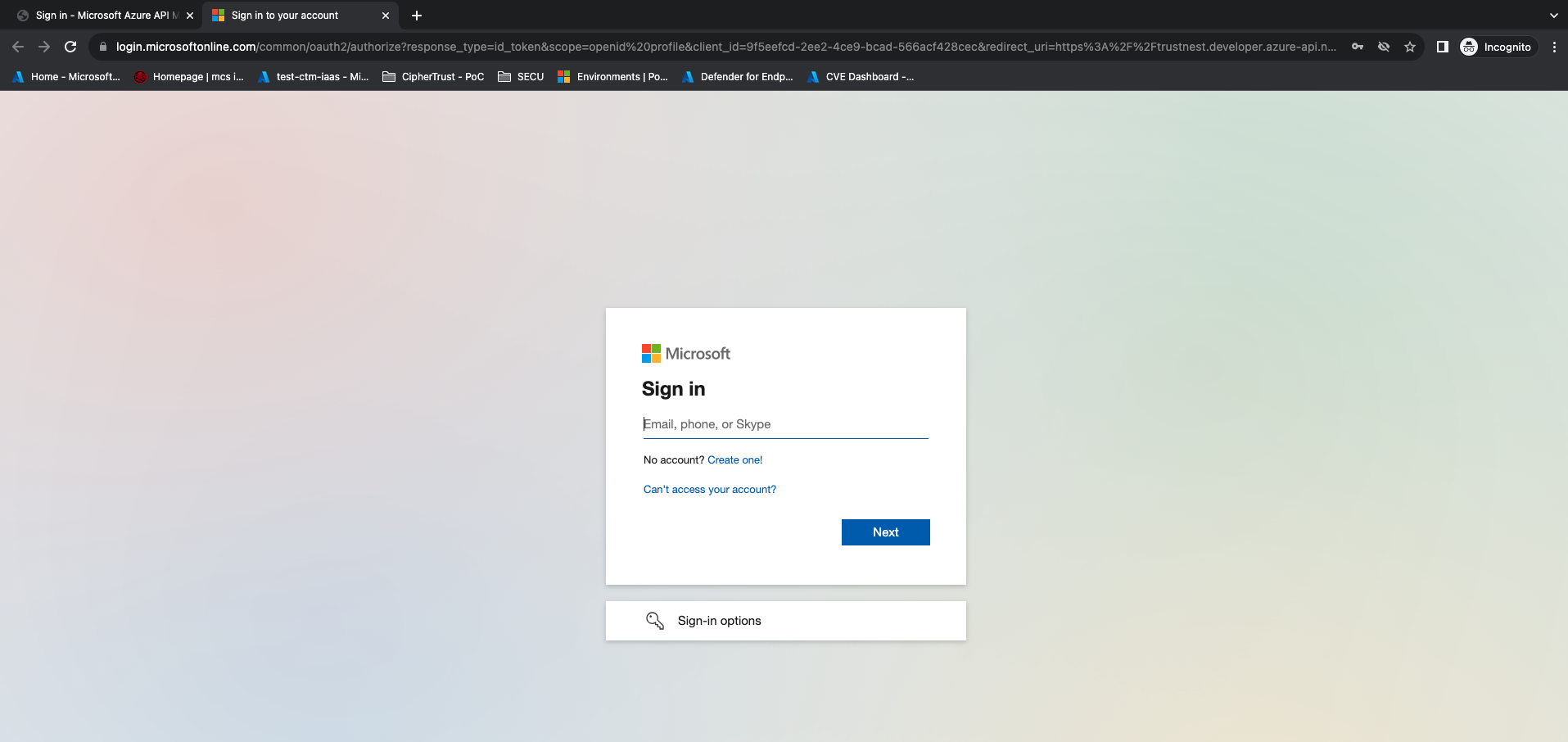

Sign in for the first time

Click the "Sign in" button or "Sign in with AAD" in the top menu:

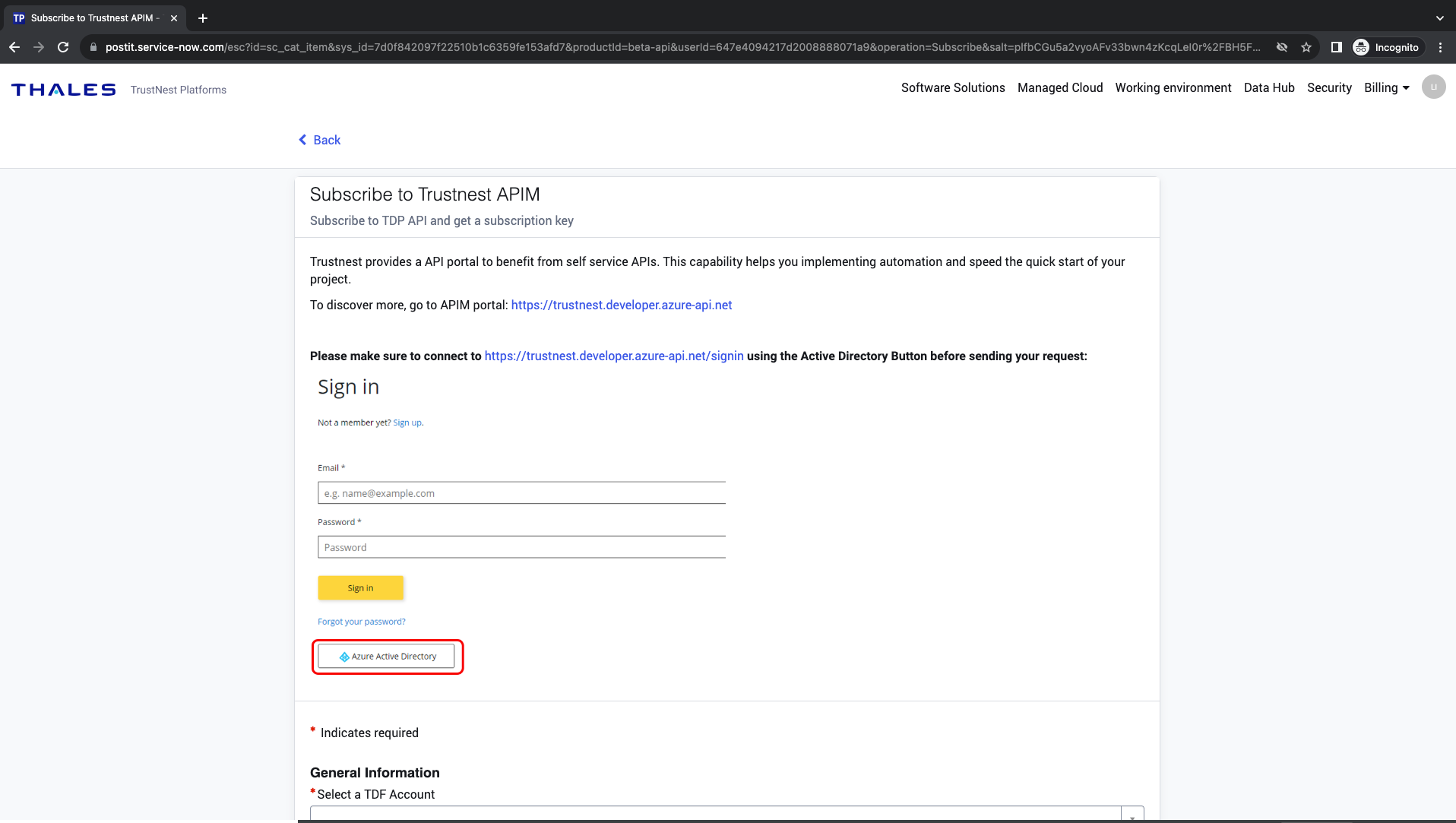

Click "Azure Active Directory". You should be redirected to the thalesdigital.io SSO page.

On your first sign-in, an additional step asks you to enter or confirm your email address. This is expected: provide it and continue.

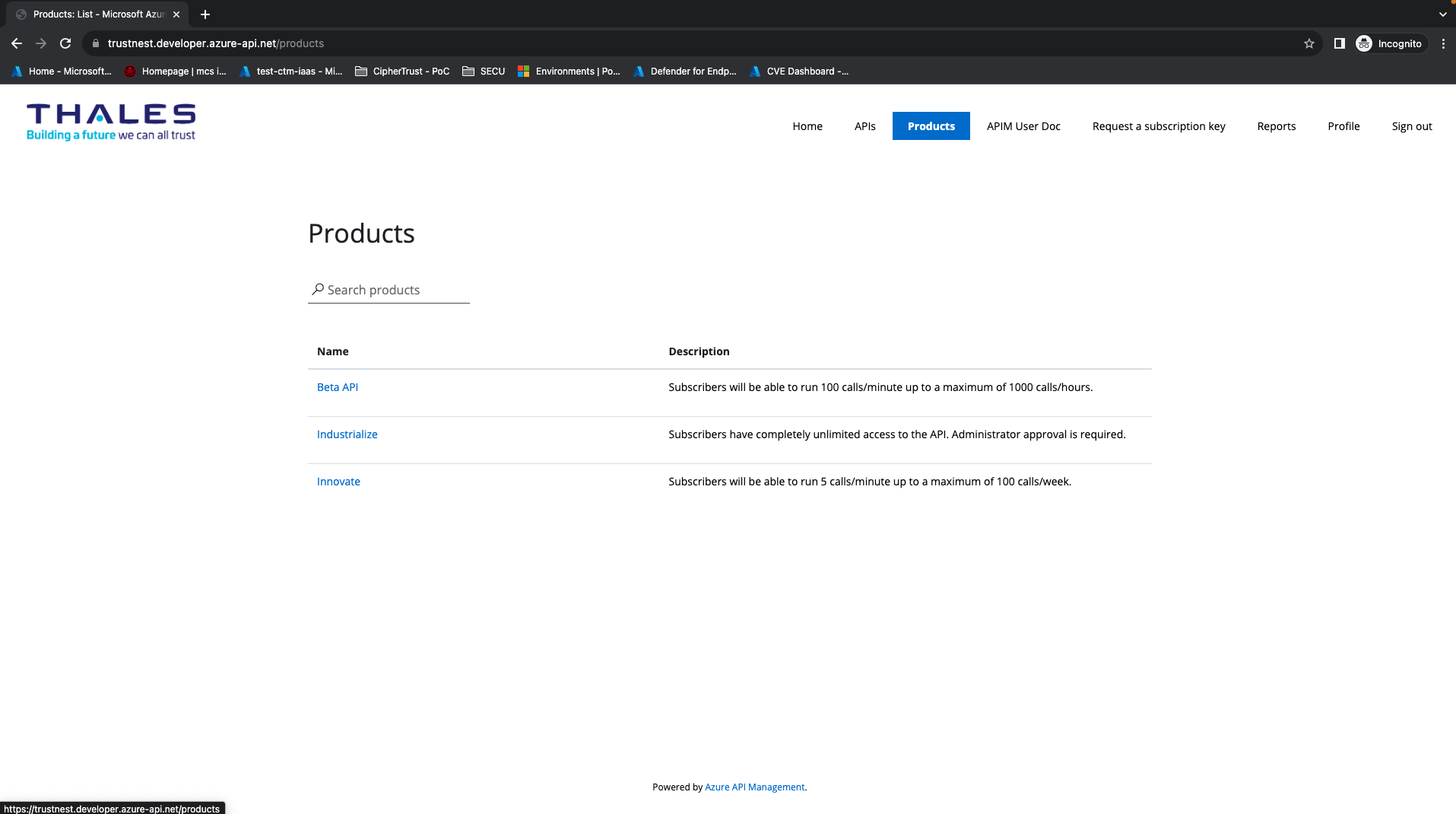

Get a subscription key for Beta API Product

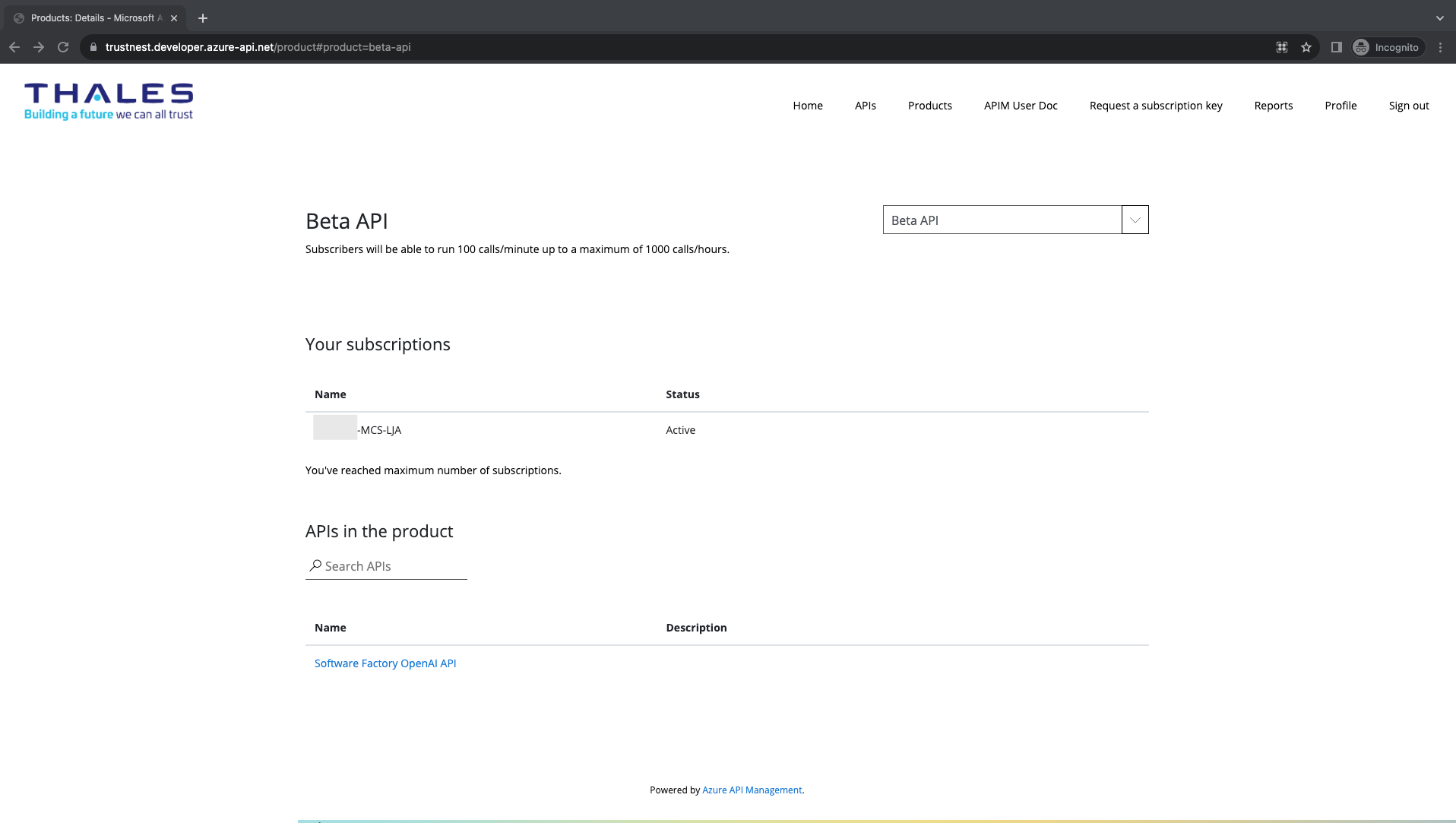

To access an API, you need a valid subscription key. Go to Product (in the top menu).

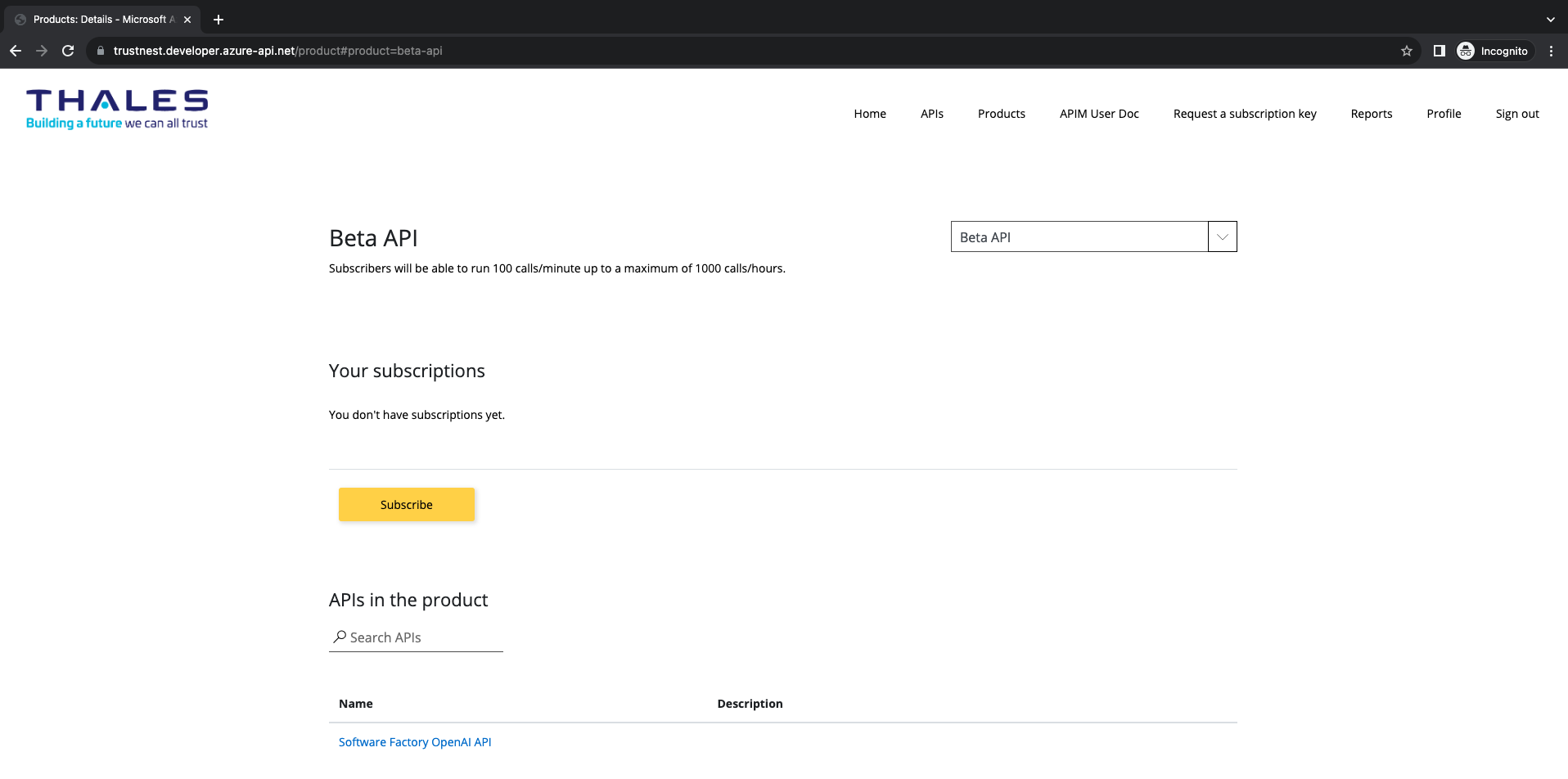

Select Beta API.

Click "Subscribe" (yellow button). You should then be redirected to the postIT item "Subscribe to Trustnest APIM".

Fill in the following fields:

- TDFaccountID

- APIM offer: for OpenAI, choose "Openai API (APIM Beta API)"

Click "Request".

The request then goes through the approval process and is escalated to level 2 support, who configure the subscription key directly in APIM.

Once the subscription key is created, it appears on the Product page. Open the Beta API product page:

API Schema: GenAI Azure OpenAI Inference v1

Unavailable Endpoints

- /deployments/{deployment-id}/completions (This endpoint is unavailable; use /deployments/{deployment-id}/chat/completions instead.)

Active Endpoints

- /deployments/{deployment-id}/embeddings: Get a vector representation of a given input that can be easily consumed by machine learning models and algorithms.

- /deployments/{deployment-id}/chat/completions: Creates a completion for the chat message.

Available Azure OpenAI Models

| Model | Deployment Name | Description | Version | Max Context Window |
|---|---|---|---|---|
| gpt-35-turbo | gpt35-4k | General-purpose model of the GPT-3.5 family | 0613 | 4k tokens |
| gpt-35-turbo-16k | gpt35-16k | General-purpose model of the GPT-3.5 family | 0613 | 16k tokens |
| gpt-4 | gpt4-8k | General-purpose model of the GPT-4 family | 0613 | 8k tokens |
| gpt-4-32k | gpt4-32k | General-purpose model of the GPT-4 family | 0613 | 32k tokens |
| text-embedding-ada-002 | text-embedding-ada-002 | Second-generation embedding model (denoted by -002 in the model ID) | 2 | 8k tokens |
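In code, the URL path takes the deployment name, not the model name. The following Python sketch transcribes the table above into a lookup and adds a rough check that a prompt plus the requested completion fits the model's context window. `DEPLOYMENTS`, `deployment_for` and `fits_context` are hypothetical helpers, not part of the API. The sketch also assumes "Nk" means N × 1024 tokens, so confirm the exact limits for your model version.

```python
# Hypothetical lookup transcribed from the "Available Azure OpenAI Models" table.
# Assumption: "Nk" is read as N * 1024 tokens; confirm exact limits per model version.
DEPLOYMENTS = {
    # model name: (deployment name used as {deployment-id}, context window in tokens)
    "gpt-35-turbo": ("gpt35-4k", 4 * 1024),
    "gpt-35-turbo-16k": ("gpt35-16k", 16 * 1024),
    "gpt-4": ("gpt4-8k", 8 * 1024),
    "gpt-4-32k": ("gpt4-32k", 32 * 1024),
    "text-embedding-ada-002": ("text-embedding-ada-002", 8 * 1024),
}


def deployment_for(model: str) -> str:
    """Return the deployment name to use as {deployment-id} for a model."""
    if model not in DEPLOYMENTS:
        raise ValueError(f"No deployment listed for model {model!r}")
    return DEPLOYMENTS[model][0]


def fits_context(model: str, prompt_tokens: int, max_tokens: int) -> bool:
    """Rough check: prompt tokens plus requested completion tokens fit the window."""
    _, window = DEPLOYMENTS[model]
    return prompt_tokens + max_tokens <= window
```

Token counts must come from a tokenizer such as tiktoken, or from the `usage` field of a previous response.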

Versioning

Only stable versions of the Azure OpenAI API are supported.

API versioning is handled via the api-version query parameter. The format is YYYY-MM-DD. The current supported Azure OpenAI API version is 2023-05-15.
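Here is a minimal Python sketch that builds an endpoint URL with the `api-version` query parameter and rejects values that are not in YYYY-MM-DD format. The HTTPS base URL is an assumption derived from the host and path in the request examples. `endpoint_url` is a hypothetical helper.

```python
import re
from urllib.parse import urlencode

# Assumption: HTTPS, with the host and path prefix taken from the request examples.
BASE_URL = "https://trustnest.azure-api.net/genai-aoai-inference/v1"
SUPPORTED_API_VERSION = "2023-05-15"


def endpoint_url(deployment_id: str, operation: str,
                 api_version: str = SUPPORTED_API_VERSION) -> str:
    """Build the full URL for an operation such as 'chat/completions' or 'embeddings'."""
    # api-version must use the YYYY-MM-DD format.
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", api_version):
        raise ValueError(f"api-version must be YYYY-MM-DD, got {api_version!r}")
    query = urlencode({"api-version": api_version})
    return f"{BASE_URL}/deployments/{deployment_id}/{operation}?{query}"
```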

API Endpoints

Using the Azure API Management Developer Portal

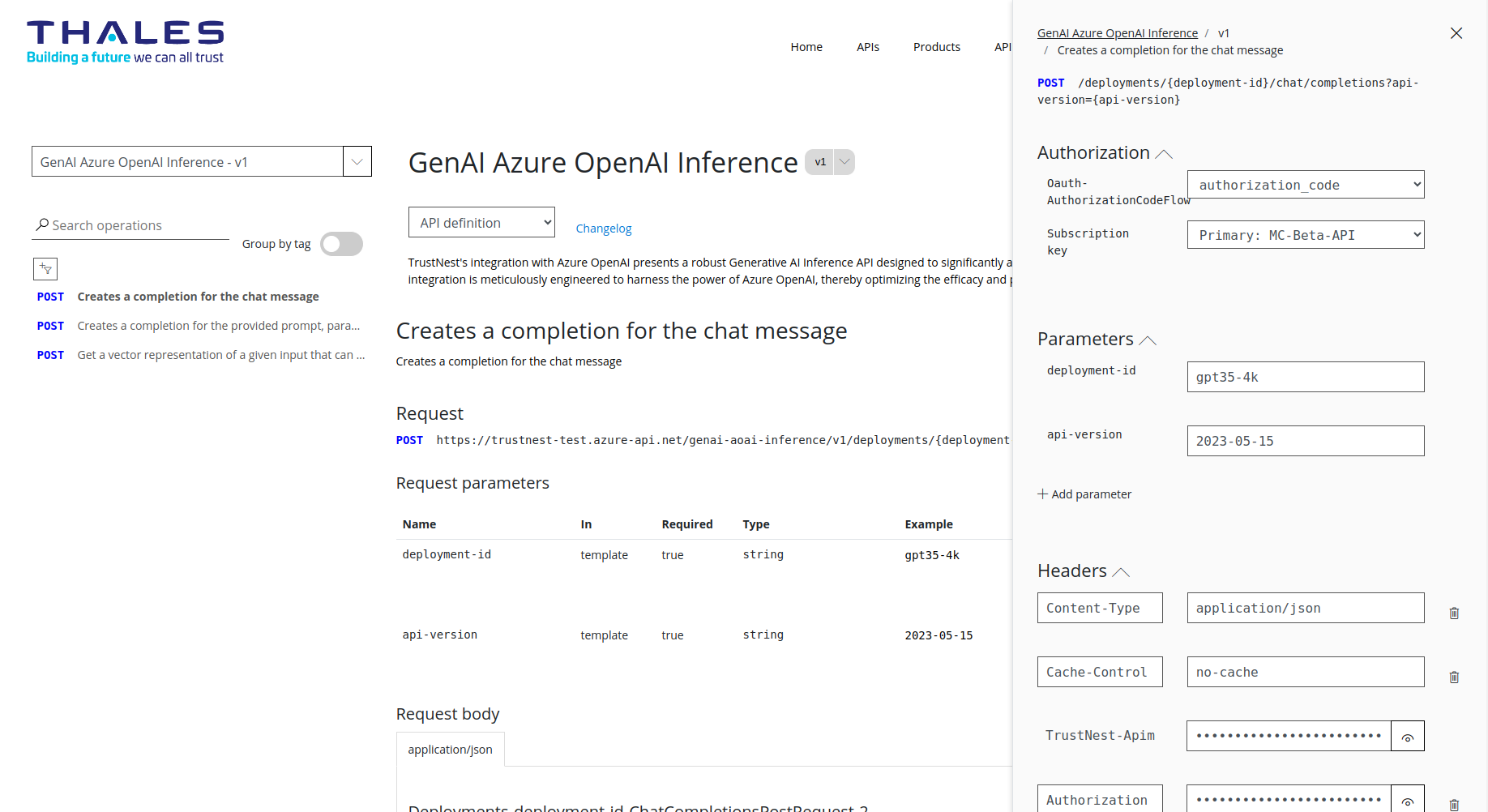

The Azure API Management Developer Portal is a web-based interface that allows you to interact with the API without having to write any code. This section will walk you through the steps to create a chat completion using the Developer Portal.

Choose GenAI Azure OpenAI Inference API from the top menu:

Authorization section:

- Authorization: Oauth-AuthorizationCodeFlow. Select "authorization_code": a popup window opens and obtains an AAD token using your active session (this is transparent to you).

- TrustNest-Apim-Subscription-Key: Your subscription key for the API (see Get a subscription key for Beta API Product).

Deployment ID: The deployment ID of the model you want to use. Use a value from the "Deployment Name" column of the Available Azure OpenAI Models table.

API Version: The API version you want to use. See the Versioning section for the currently supported value.
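Outside the portal, you supply these credentials yourself. The sketch below assembles the two headers described above. It does not cover obtaining the AAD token in code (for example with MSAL). `auth_headers` is a hypothetical helper.

```python
def auth_headers(subscription_key: str, aad_token: str) -> dict:
    """Build the two credentials the gateway expects on every call."""
    if not subscription_key or not aad_token:
        # The API requires both the APIM subscription key and an AAD bearer token.
        raise ValueError("Both a subscription key and an AAD token are required")
    return {
        "TrustNest-Apim-Subscription-Key": subscription_key,
        "Authorization": f"Bearer {aad_token}",
    }
```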

1. Create Chat Completion

Endpoint: /deployments/{deployment-id}/chat/completions

Creates a chat completion based on the provided prompt, parameters, and chosen model.

Request

You can use the following example to create a chat completion:

```http
POST /genai-aoai-inference/v1/deployments/{deployment-id}/chat/completions?api-version={api-version} HTTP/1.1
Host: trustnest.azure-api.net
Content-Type: application/json
Cache-Control: no-cache
TrustNest-Apim-Subscription-Key: <YOUR_SUBSCRIPTION_KEY>
Authorization: Bearer <YOUR_AAD_TOKEN>

{
  "messages": [{
    "role": "user",
    "content": "Hello!"
  }],
  "model": "gpt-35-turbo",
  "max_tokens": 25,
  "temperature": 0.3,
  "top_p": 1
}
```
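The same request can be sent from Python using only the standard library. This is a sketch, not an official client. The HTTPS base URL is assumed from the example's host and path, and `build_chat_request` and `send` are hypothetical helper names. The `model` field is omitted on the assumption that the deployment in the URL selects the model. Extra keyword arguments are copied into the JSON body as-is.

```python
import json
import urllib.request

# Assumption: HTTPS, with host and path prefix taken from the request example above.
BASE_URL = "https://trustnest.azure-api.net/genai-aoai-inference/v1"


def build_chat_request(deployment_id, messages, subscription_key, aad_token,
                       api_version="2023-05-15", **params):
    """Prepare (without sending) a POST to /deployments/{deployment-id}/chat/completions."""
    url = (f"{BASE_URL}/deployments/{deployment_id}/chat/completions"
           f"?api-version={api_version}")
    # Optional parameters such as max_tokens, temperature, top_p go into the body as-is.
    body = {"messages": messages, **params}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Cache-Control": "no-cache",
            "TrustNest-Apim-Subscription-Key": subscription_key,
            "Authorization": f"Bearer {aad_token}",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request; returns the decoded JSON body, raises HTTPError on non-2xx."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `send(build_chat_request("gpt35-4k", [{"role": "user", "content": "Hello!"}], key, token, max_tokens=25, temperature=0.3, top_p=1))` performs the call and returns the parsed response. Errors such as 401 or 429 surface as `urllib.error.HTTPError`.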

Response

You should receive a response similar to the following:

```http
HTTP/1.1 200 OK

{
  "id": "chatcmpl-88bRZB7bJjhiViAvAsB538aZ1THw3",
  "object": "chat.completion",
  "created": 1697061249,
  "model": "gpt-35-turbo",
  "choices": [{
    "index": 0,
    "finish_reason": "length",
    "message": {
      "role": "assistant",
      "content": "Hi there! How can I assist you today?"
    }
  }],
  "usage": {
    "completion_tokens": 5,
    "prompt_tokens": 9,
    "total_tokens": 14
  }
}
```
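To consume this response in code, read the first choice and check `finish_reason`. A value of `length` means generation stopped at a token limit (`max_tokens` or the context window), so the text may be cut off. Here is a minimal sketch. `Reply` and `parse_chat_completion` are hypothetical names.

```python
from typing import NamedTuple


class Reply(NamedTuple):
    content: str
    truncated: bool
    total_tokens: int


def parse_chat_completion(body: dict) -> Reply:
    """Extract the assistant message and token usage from a chat.completion body."""
    choice = body["choices"][0]
    return Reply(
        content=choice["message"]["content"],
        # "length" means generation hit a token limit, so the text may be cut off.
        truncated=choice.get("finish_reason") == "length",
        total_tokens=body["usage"]["total_tokens"],
    )
```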

2. Get Embeddings

Endpoint: /deployments/{deployment-id}/embeddings

Get a vector representation of a given input that can be easily consumed by machine learning models and algorithms.

Request

You can use the following example to get embeddings:

```http
POST /genai-aoai-inference/v1/deployments/{deployment-id}/embeddings?api-version={api-version} HTTP/1.1
Host: trustnest.azure-api.net
Content-Type: application/json
Cache-Control: no-cache
Authorization: Bearer <YOUR_AAD_TOKEN>
TrustNest-Apim-Subscription-Key: <YOUR_SUBSCRIPTION_KEY>

{
  "input": "This is a test.",
  "user": "string",
  "input_type": "query",
  "model": "text-embedding-ada-002"
}
```
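Here is a standard-library Python sketch of the same embeddings call. It sends only `input` by default. The optional `user` and `input_type` fields from the example can be passed as keyword arguments. The HTTPS base URL is an assumption, and `build_embeddings_request` and `embedding_from` are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: HTTPS, with host and path prefix taken from the request example above.
BASE_URL = "https://trustnest.azure-api.net/genai-aoai-inference/v1"


def build_embeddings_request(text, subscription_key, aad_token,
                             deployment_id="text-embedding-ada-002",
                             api_version="2023-05-15", **params):
    """Prepare (without sending) a POST to /deployments/{deployment-id}/embeddings."""
    url = (f"{BASE_URL}/deployments/{deployment_id}/embeddings"
           f"?api-version={api_version}")
    # Optional fields from the example (user, input_type) can be passed via **params.
    body = {"input": text, **params}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "TrustNest-Apim-Subscription-Key": subscription_key,
            "Authorization": f"Bearer {aad_token}",
        },
        method="POST",
    )


def embedding_from(body):
    """Return the vector of the first item in an embeddings response body."""
    return body["data"][0]["embedding"]
```

Send the request with `urllib.request.urlopen` and decode the JSON body before passing it to `embedding_from`.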

Response

You should receive a response similar to the following:

```http
HTTP/1.1 200 OK

{
  "object": "list",
  "data": [{
    "object": "embedding",
    "index": 0,
    "embedding": [
      -0.003542061,
      -0.0042601773,
      0.0010812181,
      ...
    ]
  }],
  "model": "ada",
  "usage": {
    "prompt_tokens": 5,
    "total_tokens": 5
  }
}
```

Note: The text-embedding-ada-002 output has 1536 dimensions.
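Embeddings are usually compared with cosine similarity, for example to rank documents against a query. This Python sketch checks the documented 1536-dimension length and computes the cosine explicitly. OpenAI describes ada-002 vectors as normalized to length 1, in which case a plain dot product gives the same result. The full formula is kept here so the function also works on unnormalized input. `check_ada_dimensions` and `cosine_similarity` are hypothetical helpers.

```python
import math

# From the note above: text-embedding-ada-002 vectors have 1536 dimensions.
ADA_002_DIMENSIONS = 1536


def check_ada_dimensions(vector):
    """Raise if a vector does not have the documented ada-002 length."""
    if len(vector) != ADA_002_DIMENSIONS:
        raise ValueError(f"Expected {ADA_002_DIMENSIONS} dimensions, got {len(vector)}")


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (ranges from -1 to 1)."""
    if len(a) != len(b):
        raise ValueError(f"Dimension mismatch: {len(a)} vs {len(b)}")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("Cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)
```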

Quota Limits

The table below lists the quota for each model. Note that these quotas are shared by all users of the model, not just your organization.

| Model | Deployment Name | Quota (tokens per minute) |
|---|---|---|
| gpt-35-turbo | gpt35-4k | 300K |
| gpt-35-turbo-16k | gpt35-16k | 300K |
| gpt-4 | gpt4-8k | 40K |
| gpt-4-32k | gpt4-32k | 80K |
| text-embedding-ada-002 | text-embedding-ada-002 | 350K |
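These quotas are shared with all users, so a request can be throttled even when your own traffic is low. Azure OpenAI and APIM signal throttling with HTTP 429 (Too Many Requests). The sketch below retries such calls with exponential backoff and honours a numeric `Retry-After` header when present. Whether the TrustNest gateway sends `Retry-After` is an assumption, and `with_backoff` is a hypothetical helper.

```python
import time
import urllib.error


def _retry_after_seconds(err):
    """Numeric Retry-After header value in seconds, or None if absent/non-numeric."""
    value = err.headers.get("Retry-After") if err.headers is not None else None
    try:
        return float(value)
    except (TypeError, ValueError):
        return None  # missing, or an HTTP-date that this sketch does not parse


def with_backoff(call, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Run call(); retry on HTTP 429 with exponential backoff, re-raise otherwise."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except urllib.error.HTTPError as err:
            if err.code != 429 or attempt == max_retries:
                raise
            delay = _retry_after_seconds(err)
            if delay is None:
                delay = base_delay * 2 ** attempt  # 1s, 2s, 4s, ... by default
            sleep(delay)
```

Wrap any network call, for example `with_backoff(lambda: urllib.request.urlopen(req, timeout=30).read())`. The `sleep` parameter exists so tests can record delays instead of waiting.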

Changelog

| Date | Version | Description |
|---|---|---|
| 2021-10-01 | 1.0.0 | Initial |

Code Examples

To use the API in your favorite language, please refer to the following code examples:

Troubleshooting

Should you encounter issues while interacting with the Azure OpenAI services via TrustNest APIM, please refer to the Troubleshooting section for guidance on identifying and resolving common problems.